About Canary

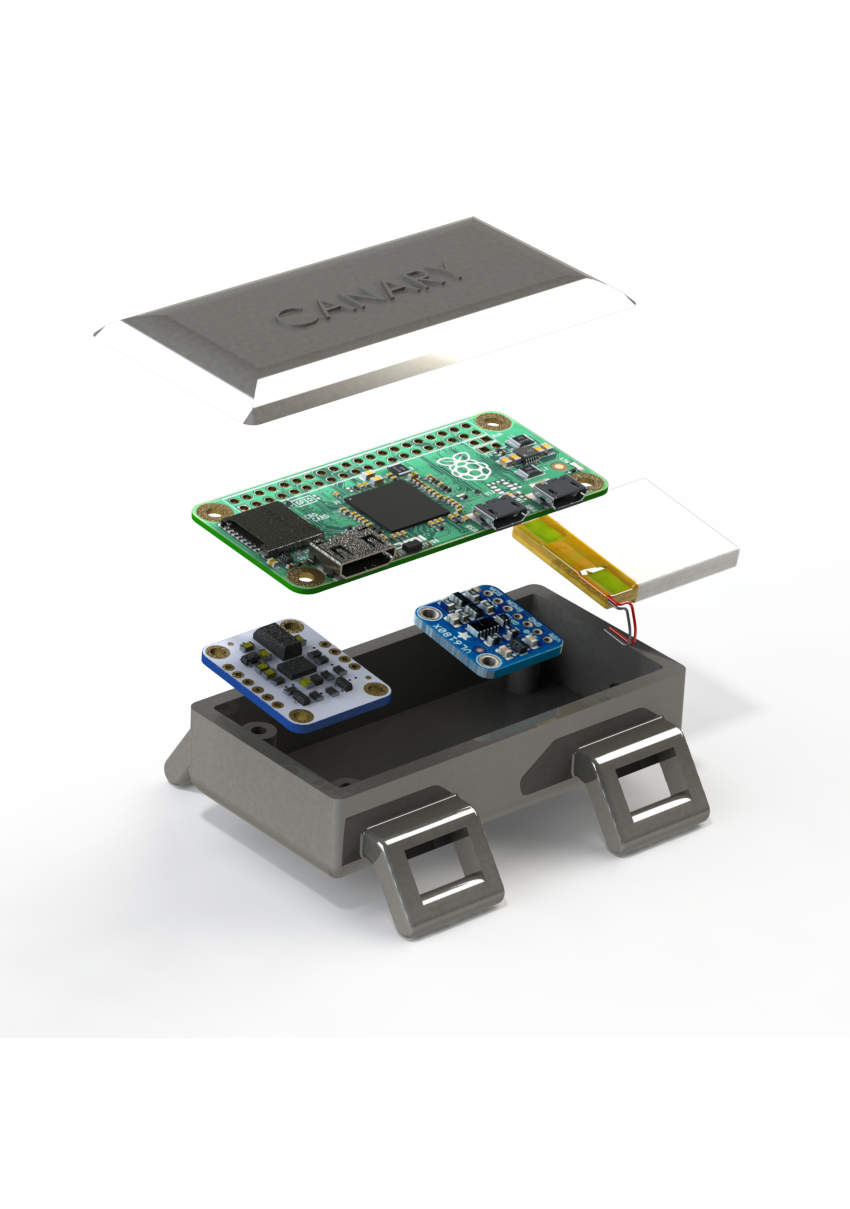

The purpose of the system is to design a low-power wearable device that converts real-time sensor data into instrumental music for music therapy sessions with dementia patients.

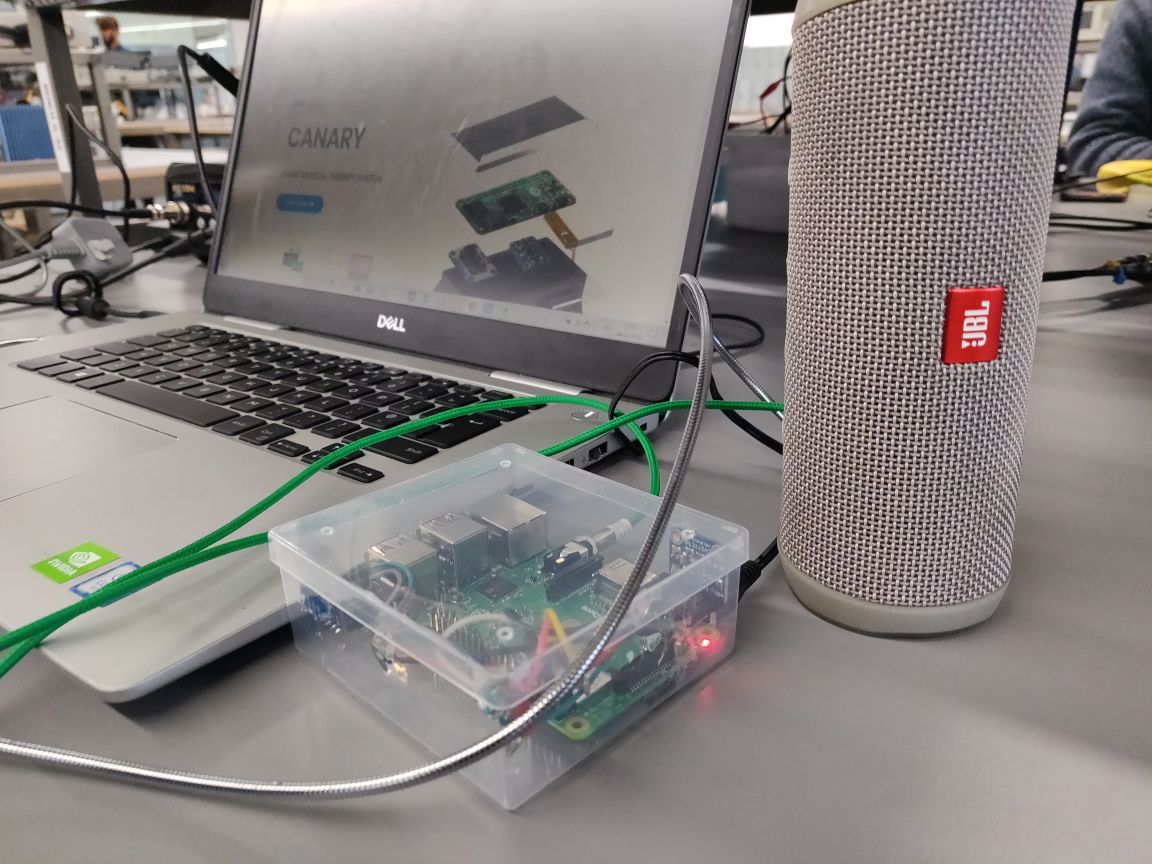

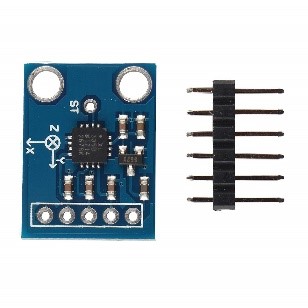

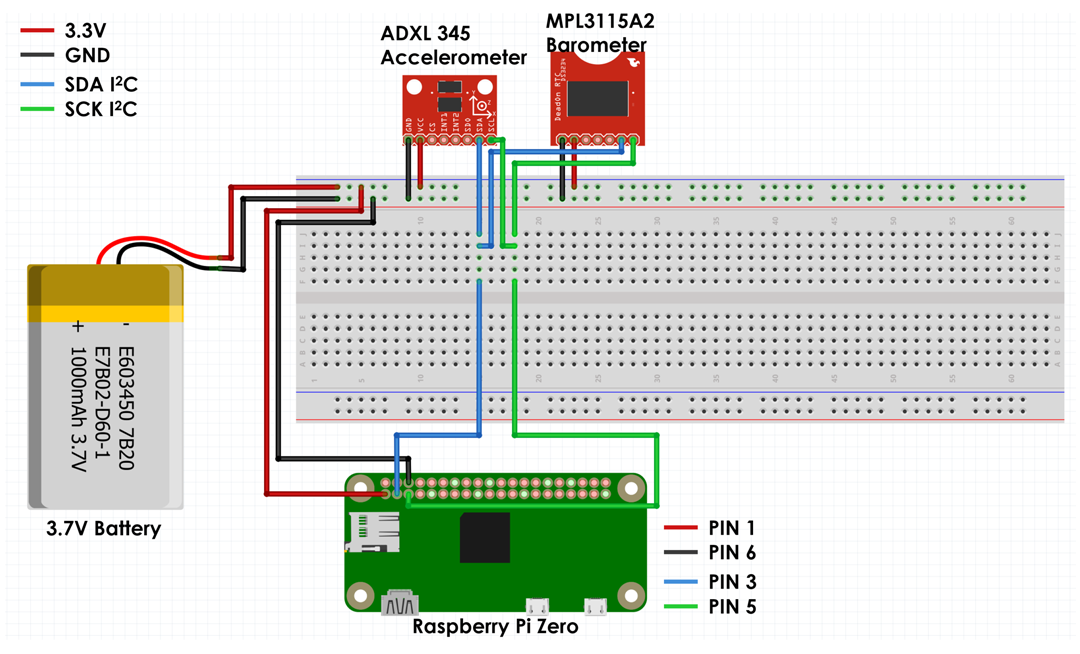

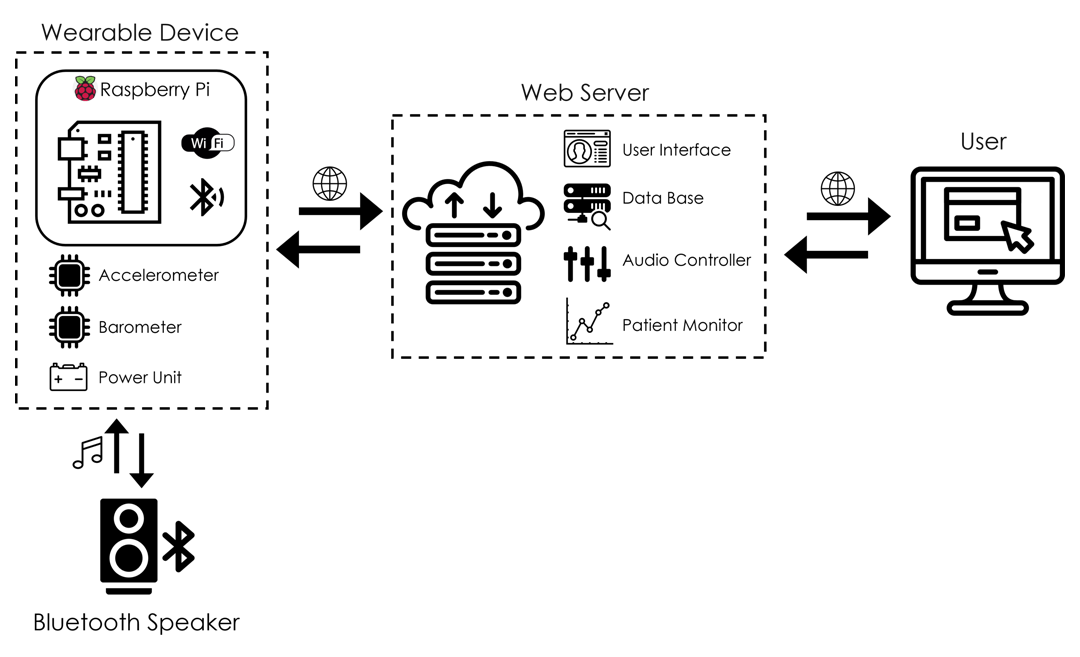

The wearable device sends accelerometer (ADXL335) and barometer (MPL3115A2) data to the sonification module, which converts it into soothing music that reflects the patient’s motion state and plays it through a Bluetooth speaker. The acceleration data is also sent to Plotly so that the patient’s motion state can be plotted.

A user interface lets the user control the volume of the sound and manage the sonification device. In addition, a caregiver can monitor the patient’s motion state through a real-time plot of the movement data from the accelerometer.

Hardware Configuration

The Components

- ADXL335 Accelerometer
- Raspberry Pi 3
- Barometer (MPL3115A2)
- Battery

Schematic diagram

System Architecture

The project has two main parts. The first is the wearable device, which contains the sensors, a Raspberry Pi Zero and a rechargeable power unit. The second is the web application, which lets the user remotely control the sensors and the audio and log data. The remote connection is established over the internet by connecting the Raspberry Pi to a server; the web app also connects to this server, which makes it possible to control the Raspberry Pi remotely.
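The device code on this page reaches the server by requesting a JSON settings file (`param.json`) on every loop iteration and reading its `volume`, `pitch` and `instrument` fields, so a single failed request stops the music loop. A minimal sketch of a more forgiving poll follows; `poll_settings` and the default values are hypothetical, and the fetch is passed in as a callable so any HTTP client can be plugged in.

```python
import json


def poll_settings(fetch_text, last_good):
    """Return the latest control settings, or the last good ones on failure.

    fetch_text: zero-argument callable returning the response body as a str
                (hypothetical wrapper around an HTTP GET of the settings URL).
    last_good:  settings dict to fall back to.
    """
    try:
        settings = json.loads(fetch_text())
    except (OSError, ValueError):
        # Network errors (OSError) or malformed JSON (ValueError): keep
        # playing with the previous settings instead of crashing the loop.
        return last_good
    if not isinstance(settings, dict):
        return last_good
    # Fields missing from the response keep their previous values
    return {**last_good, **settings}


defaults = {"volume": 100, "pitch": 0, "instrument": "acoustic grand piano"}


def offline():
    raise OSError("network unreachable")


print(poll_settings(lambda: '{"volume": 80}', defaults))
print(poll_settings(offline, defaults) is defaults)
```

On the device the fetch could be `lambda: requests.get(control_url, timeout=5).text`; exceptions raised by `requests` derive from `OSError`, so the same `except` clause catches them.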

Software Configuration

Cloud Platform used

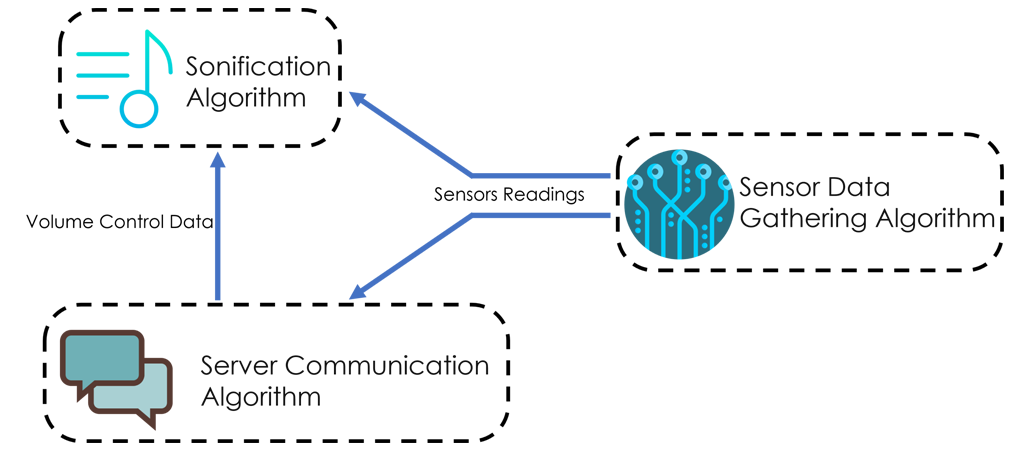

Algorithm architecture

Device-End Code

Canary App

Cloud platforms used

Cloud VPS (KVM)

The cloud platform is a cloud computing solution based on KVM (Kernel-based Virtual Machine), the virtualization module built into the Linux kernel. It offers scalable, virtualized resources as a service, billed on a utility basis. We used it to set up our own customized server, giving near-instant access to the platform.

Plot.ly

Plotly is a technical computing company headquartered in Montreal, Quebec, that develops online data analytics and visualization tools. Plotly provides online graphing, analytics and statistics tools for individuals and for collaboration, as well as scientific graphing libraries for Python, R, MATLAB, Perl, Julia, Arduino and REST.
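In the device code, the Plotly trace is created with `stream=dict(token=..., maxpoints=200)`, so the live plot keeps only the most recent 200 acceleration samples. Assuming you also want that rolling window on the Pi itself (for example, to redraw the plot after a network outage), a minimal local equivalent can be sketched with `collections.deque`; `RollingSeries` is a hypothetical helper, not part of Plotly.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingSeries:
    """Keep only the newest `maxpoints` (x, y) samples, mirroring a
    streaming trace configured with maxpoints=200."""

    def __init__(self, maxpoints=200):
        self.x = deque(maxlen=maxpoints)
        self.y = deque(maxlen=maxpoints)

    def write(self, x, y):
        # Appending to a full deque silently drops the oldest sample
        self.x.append(x)
        self.y.append(y)

    def snapshot(self):
        return list(self.x), list(self.y)


series = RollingSeries(maxpoints=200)
start = datetime(2019, 1, 1)
for i in range(250):
    series.write(start + timedelta(seconds=i), float(i))

xs, ys = series.snapshot()
print(len(ys), ys[0], ys[-1])  # 200 50.0 249.0
```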

Dataplicity

Dataplicity is a service that simplifies connecting to devices over the internet, in particular making a Raspberry Pi reachable from anywhere. Dataplicity uses an opportunistically connected, client-initiated, secure HTTPS connection to the Dataplicity IoT Router. It works on satellite, cellular and fixed networks, even if the device cannot be pinged directly; all it needs is a functioning internet connection.

Algorithm Architecture

Code

```python
import datetime
import os
import time

import adafruit_adxl34x
import board
import busio
import numpy
import plotly.plotly as py  # pre-4.0 Plotly API; in plotly>=4 it moved to chart_studio.plotly
import requests
import smbus
from plotly.graph_objs import Figure, Layout, Scatter  # plotly graph objects

import sonify

MPL3115A2_ADDR = 0x60       # I2C address of the MPL3115A2 barometer
MPL3115A2_CTRL_REG1 = 0x26  # control register 1
CONTROL_URL = "https://canaryplay.isensetune.com/app/param.json"


def main():
    # Credentials come from environment variables (names chosen here for
    # illustration) instead of being hard-coded in the source.
    username = os.environ["PLOTLY_USERNAME"]
    api_key = os.environ["PLOTLY_API_KEY"]
    stream_token = os.environ["PLOTLY_STREAM_TOKEN"]
    py.sign_in(username, api_key)

    # Streaming scatter trace that keeps only the latest 200 points
    trace1 = Scatter(x=[], y=[], stream=dict(token=stream_token, maxpoints=200))
    layout = Layout(title='Canary Patient Motion Monitor')
    fig = Figure(data=[trace1], layout=layout)
    print(py.plot(fig, filename='Canary Patient Motion Monitor Values'))

    stream = py.Stream(stream_token)
    stream.open()

    sonifier = sonify.Sonifier(audioDeviceId=2)
    i2c = busio.I2C(board.SCL, board.SDA)
    bus = smbus.SMBus(1)
    accelerometer = adafruit_adxl34x.ADXL345(i2c)

    while True:
        # Volume, pitch and instrument as set in the Canary web app
        d = requests.get(CONTROL_URL, timeout=5).json()

        # CTRL_REG1 = 0x39: barometer mode, 128x oversampling, active
        bus.write_byte_data(MPL3115A2_ADDR, MPL3115A2_CTRL_REG1, 0x39)
        time.sleep(1)
        # Read STATUS, OUT_P_MSB, OUT_P_CSB, OUT_P_LSB
        data = bus.read_i2c_block_data(MPL3115A2_ADDR, 0x00, 4)
        # Convert the data to 20 bits (18 integer + 2 fractional bits, in Pa)
        pres = ((data[1] * 65536) + (data[2] * 256) + (data[3] & 0xF0)) / 16
        pressure = (pres / 4.0) / 1000.0  # kPa
        time.sleep(0.2)

        # Magnitude of the acceleration vector (m/s^2)
        a = accelerometer.acceleration
        b = numpy.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)

        key, baro_sd = sonifier.mapDataToMidiRange(b, pressure)
        stream.write({'x': datetime.datetime.now(), 'y': b})
        sonifier.playAudio(key, int(d['volume']), baro_sd + int(d['pitch']), d['instrument'])


if __name__ == '__main__':
    main()
```
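The pressure conversion in `main()` is compact; a standalone version makes the bit layout explicit. Per the MPL3115A2 datasheet, in barometer mode the sample is a 20-bit unsigned value left-aligned across OUT_P_MSB, OUT_P_CSB and bits 7:4 of OUT_P_LSB, with the two lowest of those 20 bits being fractional pascals. The function name is our own, and the test bytes are constructed by hand, not a recorded measurement.

```python
def mpl3115a2_pressure_kpa(block):
    """Decode the 4 bytes read from register 0x00 (STATUS, OUT_P_MSB,
    OUT_P_CSB, OUT_P_LSB) into pressure in kPa."""
    msb, csb, lsb = block[1], block[2], block[3]
    raw = ((msb << 16) | (csb << 8) | lsb) >> 4  # drop the 4 unused low bits
    pascals = raw / 4.0                          # 2 fractional bits -> divide by 4
    return pascals / 1000.0


# Hand-built example: 101325 Pa = 405300 quarter-pascals = 0x62F34,
# which shifted left by 4 gives the bytes 0x62, 0xF3, 0x40.
print(mpl3115a2_pressure_kpa([0x00, 0x62, 0xF3, 0x40]))  # 101.325
```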

```python
# sonify.py
import math
import random
import time

import pygame.midi
from scipy.interpolate import interp1d

from constants import *  # INSTRUMENTS, ADXL345, MIDI_RANGE_LOW, MIDI_RANGE_HIGH, ...
from sensors import *    # project module, not shown on this page


class RunningStats:
    """
    Here is a literal pure Python translation of Welford's algorithm implementation from
    http://www.johndcook.com/standard_deviation.html:
    https://github.com/liyanage/python-modules/blob/master/running_stats.py
    """

    def __init__(self):
        self.n = 0
        self.old_m = 0
        self.new_m = 0
        self.old_s = 0
        self.new_s = 0

    def clear(self):
        self.n = 0

    def push(self, x):
        self.n += 1
        if self.n == 1:
            self.old_m = self.new_m = x
            self.old_s = 0
        else:
            self.new_m = self.old_m + (x - self.old_m) / self.n
            self.new_s = self.old_s + (x - self.old_m) * (x - self.new_m)
            self.old_m = self.new_m
            self.old_s = self.new_s

    def mean(self):
        return self.new_m if self.n else 0.0

    def variance(self):
        return self.new_s / (self.n - 1) if self.n > 1 else 0.0

    def standard_deviation(self):
        return math.sqrt(self.variance())


def midi_program(name):
    # INSTRUMENTS holds 1-based GM program numbers; pygame.midi expects 0-127
    return INSTRUMENTS[name] - 1


class Sonifier:
    def __init__(self, sensors=('Accelerometer',),
                 instrument='electric guitar (clean)',
                 audioDeviceId=0, delay=0.7):
        self.prevAcceleroVal = 0
        self.prevBaroVal = 0
        self.sensors = sensors
        self.acceleroDeviator = RunningStats()
        self.baroDeviator = RunningStats()
        self.instrument = instrument  # instrument name, a key of INSTRUMENTS
        self.volume = 127
        self.prevKey = 0
        self.audioDeviceID = audioDeviceId
        self.delay = delay
        self.pitch = 0
        self.player = None

    def deletePlayer(self):
        if self.player is not None:
            self.player.close()
            self.player = None

    def changeInstrument(self, instrument):
        # Takes effect on the next note: playAudio() sets the program each time
        self.instrument = instrument

    def changePitch(self, val):
        # val is a pitch-bend value in the range -8192..8191
        pygame.midi.init()
        self.player = pygame.midi.Output(self.audioDeviceID)
        self.player.set_instrument(midi_program(self.instrument))
        self.player.pitch_bend(val)
        self.deletePlayer()
        pygame.midi.quit()

    def playAudio(self, key, volume, pitch, instrument):
        pygame.midi.init()
        self.player = pygame.midi.Output(self.audioDeviceID)
        self.instrument = instrument
        self.player.set_instrument(midi_program(self.instrument))
        # Bend channel 0, the channel note_on() uses (the device id is not a channel)
        self.player.pitch_bend(pitch)
        self.player.note_on(key, volume)
        # Hold the note for a random 0.5-0.8 s
        self.delay = random.uniform(0.5, 0.8)
        time.sleep(self.delay)
        self.player.note_off(key, volume)
        self.deletePlayer()
        pygame.midi.quit()

    def soothingSound(self, dataPoint):
        if self.prevAcceleroVal == 0:
            self.prevAcceleroVal = dataPoint
        self.acceleroDeviator.push(dataPoint)
        sd = self.acceleroDeviator.standard_deviation()
        low, high = ADXL345['2G']
        # The comparison in this condition was lost when the page was extracted
        # (a symmetric downward branch may have been lost with it); '>' is assumed.
        if dataPoint > self.prevAcceleroVal and not (dataPoint + sd > high):
            dataPoint = dataPoint + sd
        # Clamp to the [low, high] window
        dataPoint = min(max(dataPoint, low), high)
        return dataPoint

    def soothingPitch(self, dataPoint):
        if self.prevBaroVal == 0:
            self.prevBaroVal = dataPoint
        self.baroDeviator.push(dataPoint)
        # Only the running standard deviation of the pressure is used; an earlier
        # variant that clamped the value to the BMP180 range is disabled.
        return self.baroDeviator.standard_deviation()

    def mapDataToMidiRange(self, accDataPoint, baroDataPoint):
        """
        MIDI notes have a range of 0-127; make sure the data is in that range.
        accDataPoint: acceleration magnitude (m/s^2)
        baroDataPoint: pressure (kPa)
        return: (MIDI key, barometer standard deviation x 10 used as pitch bend)
        """
        accDataPoint = self.soothingSound(accDataPoint)
        baroSd = int(self.soothingPitch(baroDataPoint)) * 10
        m = interp1d(ADXL345['2G'], [MIDI_RANGE_LOW, MIDI_RANGE_HIGH])
        key = int(m(accDataPoint))
        # Restart the accelerometer statistics every 20 samples
        if self.acceleroDeviator.n == 20:
            self.acceleroDeviator.clear()
        self.prevAcceleroVal = accDataPoint
        return key, baroSd
```
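`RunningStats` uses Welford's online recurrence, which updates the mean and the sum of squared deviations one sample at a time instead of storing the window. A quick sanity check, sketched below, compares a compact restatement of the same recurrence (`WelfordStats`, a hypothetical name) with `statistics.stdev` on 20 random samples, matching the 20-sample window after which `mapDataToMidiRange` clears the accelerometer statistics.

```python
import math
import random
import statistics


class WelfordStats:
    """Same recurrence as RunningStats, with fewer attributes."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def push(self, x):
        self.n += 1
        delta = x - self.mean            # deviation from the old mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)  # uses old and new mean

    def variance(self):
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0

    def standard_deviation(self):
        return math.sqrt(self.variance())


random.seed(0)
samples = [random.uniform(7.0, 13.0) for _ in range(20)]
ws = WelfordStats()
for s in samples:
    ws.push(s)
print(math.isclose(ws.standard_deviation(), statistics.stdev(samples)))  # True
```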


```python
# constants.py (imported by sonify.py with `from constants import *`)
dataThreshold = 100

NOTES = [
    ['C'], ['C#', 'Db'], ['D'], ['D#', 'Eb'], ['E'], ['F'], ['F#', 'Gb'],
    ['G'], ['G#', 'Ab'], ['A'], ['A#', 'Bb'], ['B']
]

MIDI_RANGE_LOW = 0
MIDI_RANGE_HIGH = 127


def get_keys():
    base_keys = {
        'c_major': ['C', 'D', 'E', 'F', 'G', 'A', 'B'],
        'd_major': ['D', 'E', 'F#', 'G', 'A', 'B', 'C#'],
        'e_major': ['E', 'F#', 'G#', 'A', 'B', 'C#', 'D#'],
        'f_major': ['F', 'G', 'A', 'Bb', 'C', 'D', 'E', 'F'],
        'g_major': ['G', 'A', 'B', 'C', 'D', 'E', 'F#'],
        'a_major': ['A', 'B', 'C#', 'D', 'E', 'F#', 'G#', 'A'],
        'b_major': ['B', 'C#', 'D#', 'E', 'F#', 'G#', 'A#', 'B'],
        'c_sharp_major': ['Db', 'Eb', 'F', 'Gb', 'Ab', 'Bb', 'C', 'Db'],
        'd_sharp_major': ['Eb', 'F', 'G', 'Ab', 'Bb', 'C', 'D'],
        'f_sharp_major': ['F#', 'G#', 'A#', 'B', 'C#', 'D#', 'F', 'F#'],
        'g_sharp_major': ['Ab', 'Bb', 'C', 'Db', 'Eb', 'F', 'G', 'Ab'],
        'a_sharp_major': ['Bb', 'C', 'D', 'Eb', 'F', 'G', 'A', 'Bb']
    }
    # Enharmonic aliases
    base_keys['d_flat_major'] = base_keys['c_sharp_major']
    base_keys['e_flat_major'] = base_keys['d_sharp_major']
    base_keys['g_flat_major'] = base_keys['f_sharp_major']
    base_keys['a_flat_major'] = base_keys['g_sharp_major']
    base_keys['b_flat_major'] = base_keys['a_sharp_major']
    return base_keys


KEYS = get_keys()

# Instrument and Percussion map from
# https://www.midi.org/specifications/item/gm-level-1-sound-set
# Instrument program numbers are 1-based (1-128) as in that table;
# pygame.midi expects 0-127, so subtract 1 before use.
INSTRUMENTS = {
    'accordion': 22,
    'acoustic bass': 33,
    'acoustic grand piano': 1,
    'acoustic guitar (nylon)': 25,
    'acoustic guitar (steel)': 26,
    'agogo': 114,
    'alto sax': 66,
    'applause': 127,
    'bagpipe': 110,
    'banjo': 106,
    'baritone sax': 68,
    'bassoon': 71,
    'bird tweet': 124,
    'blown bottle': 77,
    'brass section': 62,
    'breath noise': 122,
    'bright acoustic piano': 2,
    'celesta': 9,
    'cello': 43,
    'choir aahs': 53,
    'church organ': 20,
    'clarinet': 72,
    'clavi': 8,
    'contrabass': 44,
    'distortion guitar': 31,
    'drawbar organ': 17,
    'dulcimer': 16,
    'electric bass (finger)': 34,
    'electric bass (pick)': 35,
    'electric grand piano': 3,
    'electric guitar (clean)': 28,
    'electric guitar (jazz)': 27,
    'electric guitar (muted)': 29,
    'electric piano 1': 5,
    'electric piano 2': 6,
    'english horn': 70,
    'fiddle': 111,
    'flute': 74,
    'french horn': 61,
    'fretless bass': 36,
    'fx 1 (rain)': 97,
    'fx 2 (soundtrack)': 98,
    'fx 3 (crystal)': 99,
    'fx 4 (atmosphere)': 100,
    'fx 5 (brightness)': 101,
    'fx 6 (goblins)': 102,
    'fx 7 (echoes)': 103,
    'fx 8 (sci-fi)': 104,
    'glockenspiel': 10,
    'guitar fret noise': 121,
    'guitar harmonics': 32,
    'gunshot': 128,
    'harmonica': 23,
    'harpsichord': 7,
    'helicopter': 126,
    'honky-tonk piano': 4,
    'kalimba': 109,
    'koto': 108,
    'lead 1 (square)': 81,
    'lead 2 (sawtooth)': 82,
    'lead 3 (calliope)': 83,
    'lead 4 (chiff)': 84,
    'lead 5 (charang)': 85,
    'lead 6 (voice)': 86,
    'lead 7 (fifths)': 87,
    'lead 8 (bass + lead)': 88,
    'marimba': 13,
    'melodic tom': 118,
    'music box': 11,
    'muted trumpet': 60,
    'oboe': 69,
    'ocarina': 80,
    'orchestra hit': 56,
    'orchestral harp': 47,
    'overdriven guitar': 30,
    'pad 1 (new age)': 89,
    'pad 2 (warm)': 90,
    'pad 3 (polysynth)': 91,
    'pad 4 (choir)': 92,
    'pad 5 (bowed)': 93,
    'pad 6 (metallic)': 94,
    'pad 7 (halo)': 95,
    'pad 8 (sweep)': 96,
    'pan flute': 76,
    'percussive organ': 18,
    'piccolo': 73,
    'pizzicato strings': 46,
    'recorder': 75,
    'reed organ': 21,
    'reverse cymbal': 120,
    'rock organ': 19,
    'seashore': 123,
    'shakuhachi': 78,
    'shamisen': 107,
    'shanai': 112,
    'sitar': 105,
    'slap bass 1': 37,
    'slap bass 2': 38,
    'soprano sax': 65,
    'steel drums': 115,
    'string ensemble 1': 49,
    'string ensemble 2': 50,
    'synth bass 1': 39,
    'synth bass 2': 40,
    'synth drum': 119,
    'synth voice': 55,
    'synthbrass 1': 63,
    'synthbrass 2': 64,
    'synthstrings 1': 51,
    'synthstrings 2': 52,
    'taiko drum': 117,
    'tango accordion': 24,
    'telephone ring': 125,
    'tenor sax': 67,
    'timpani': 48,
    'tinkle bell': 113,
    'tremolo strings': 45,
    'trombone': 58,
    'trumpet': 57,
    'tuba': 59,
    'tubular bells': 15,
    'vibraphone': 12,
    'viola': 42,
    'violin': 41,
    'voice oohs': 54,
    'whistle': 79,
    'woodblock': 116,
    'xylophone': 14
}

# Percussion values are MIDI note numbers on the GM percussion channel
PERCUSSION = {
    'acoustic bass drum': 35,
    'acoustic snare': 38,
    'bass drum 1': 36,
    'cabasa': 69,
    'chinese cymbal': 52,
    'claves': 75,
    'closed hi hat': 42,
    'cowbell': 56,
    'crash cymbal 1': 49,
    'crash cymbal 2': 57,
    'electric snare': 40,
    'hand clap': 39,
    'hi bongo': 60,
    'hi wood block': 76,
    'hi-mid tom': 48,
    'high agogo': 67,
    'high floor tom': 43,
    'high timbale': 65,
    'high tom': 50,
    'long guiro': 74,
    'long whistle': 72,
    'low agogo': 68,
    'low bongo': 61,
    'low conga': 64,
    'low floor tom': 41,
    'low timbale': 66,
    'low tom': 45,
    'low wood block': 77,
    'low-mid tom': 47,
    'maracas': 70,
    'mute cuica': 78,
    'mute hi conga': 62,
    'mute triangle': 80,
    'open cuica': 79,
    'open hi conga': 63,
    'open hi-hat': 46,
    'open triangle': 81,
    'pedal hi-hat': 44,
    'ride bell': 53,
    'ride cymbal 1': 51,
    'ride cymbal 2': 59,
    'short guiro': 73,
    'short whistle': 71,
    'side stick': 37,
    'splash cymbal': 55,
    'tambourine': 54,
    'vibraslap': 58
}

# Acceleration-magnitude window in m/s^2 (adafruit_adxl34x reports m/s^2)
# that is mapped onto the MIDI note range
ADXL345 = {
    '2G': [7, 13]
}

BMP180 = [50, 500]
```
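Ignoring the standard-deviation adjustment in `soothingSound`, the note selection in `mapDataToMidiRange` amounts to clamping the acceleration magnitude to `ADXL345['2G']` (7 to 13 m/s²) and mapping it linearly onto MIDI notes 0 to 127. The dependency-free sketch below reproduces that mapping with plain arithmetic instead of `scipy.interpolate.interp1d`; `acceleration_to_midi_key` is a hypothetical name. A device at rest measures about 9.81 m/s², which lands near the middle of the note range.

```python
import math


def acceleration_to_midi_key(ax, ay, az, low=7.0, high=13.0,
                             midi_low=0, midi_high=127):
    """Map the magnitude of an (ax, ay, az) reading in m/s^2 to a MIDI key.

    The magnitude is clamped to [low, high] and mapped linearly onto
    [midi_low, midi_high], then truncated to int like int(interp1d(...)(x)).
    """
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    clamped = min(max(magnitude, low), high)
    fraction = (clamped - low) / (high - low)
    return int(midi_low + fraction * (midi_high - midi_low))


print(acceleration_to_midi_key(0.0, 0.0, 9.81))  # 59 (at rest, about 1 g)
print(acceleration_to_midi_key(0.0, 0.0, 20.0))  # 127 (clamped to the top)
```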

Canary App

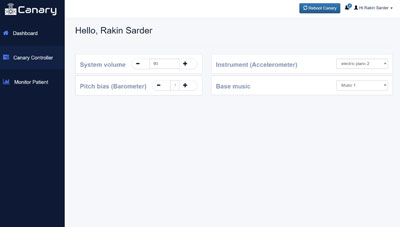

The Canary web application is a general control centre where the user can adjust music settings such as volume, pitch, background music and instrument, and monitor the patient through a real-time plot of movement data.

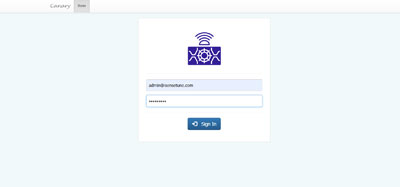

Authenticate

To use Canary, a user has to log in with a username and password.

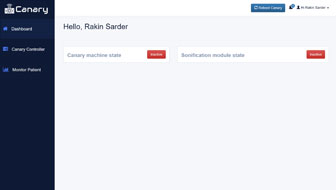

Dashboard

After logging in, the user is taken to the web application’s dashboard, where the device status can be checked. The user can also reboot the device at any time with the ‘Reboot Canary’ button in the top right corner, which is available throughout the web application.

Control center

From the sidebar, the user can navigate to the ‘Canary Control Center’, where the device’s music settings can be adjusted. These settings include volume, pitch, instrument and background music.
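On the device, the Control Center settings become MIDI parameters: `volume` is passed to `note_on` as the note velocity, and `pitch` is added to the barometer term and sent as a pitch bend. Assuming you want to guard against out-of-range values coming from the web app, a sanitizing step might look like the sketch below; `sanitize_settings` and its fallback rules are hypothetical. MIDI velocity runs from 0 to 127, and `pygame.midi` pitch bend accepts -8192 to 8191.

```python
PITCH_BEND_MIN, PITCH_BEND_MAX = -8192, 8191  # 14-bit MIDI pitch-bend range


def clamp(value, low, high):
    return max(low, min(high, value))


def sanitize_settings(raw, instruments):
    """Coerce web-app settings into values pygame.midi will accept.

    raw: dict with 'volume', 'pitch' and 'instrument' keys (as read by the
         device code); values may arrive as strings.
    instruments: mapping of instrument name -> program number.
    """
    volume = clamp(int(raw.get("volume", 127)), 0, 127)  # MIDI velocity
    pitch = clamp(int(raw.get("pitch", 0)), PITCH_BEND_MIN, PITCH_BEND_MAX)
    instrument = raw.get("instrument")
    if instrument not in instruments:
        instrument = next(iter(instruments))  # fall back to the first known name
    return {"volume": volume, "pitch": pitch, "instrument": instrument}


instruments = {"acoustic grand piano": 1, "flute": 74}
print(sanitize_settings({"volume": "150", "pitch": "-9000", "instrument": "kazoo"}, instruments))
```

Because the device adds the barometer term to `pitch` before bending, the same clamp would also need to be applied to that sum before calling `pitch_bend`.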

Motion monitor

A user can monitor the patient’s state by selecting ‘Monitor Patient’ in the sidebar, which shows a real-time plot of the patient’s movement data.

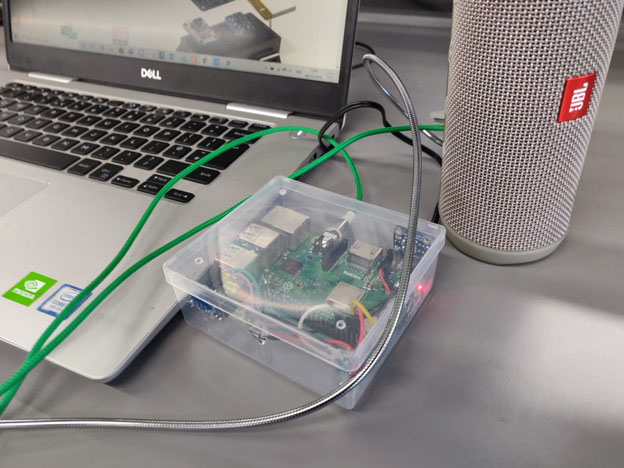

Project demonstration

Limitations

- The device is not packaged to commercial product standards because of material constraints

- The project was initially planned to run on a Raspberry Pi Zero, but due to time constraints the port from the Raspberry Pi 3 to the Raspberry Pi Zero was not completed

- The device is not battery powered at this stage because a suitable battery was not available

- There is a latency of 0.5 to 0.7 s when playing the music notes

Future Plans

- Build an enclosure for the device so it can become a consumer product

- Make the device portable and rechargeable

- Run user safety tests